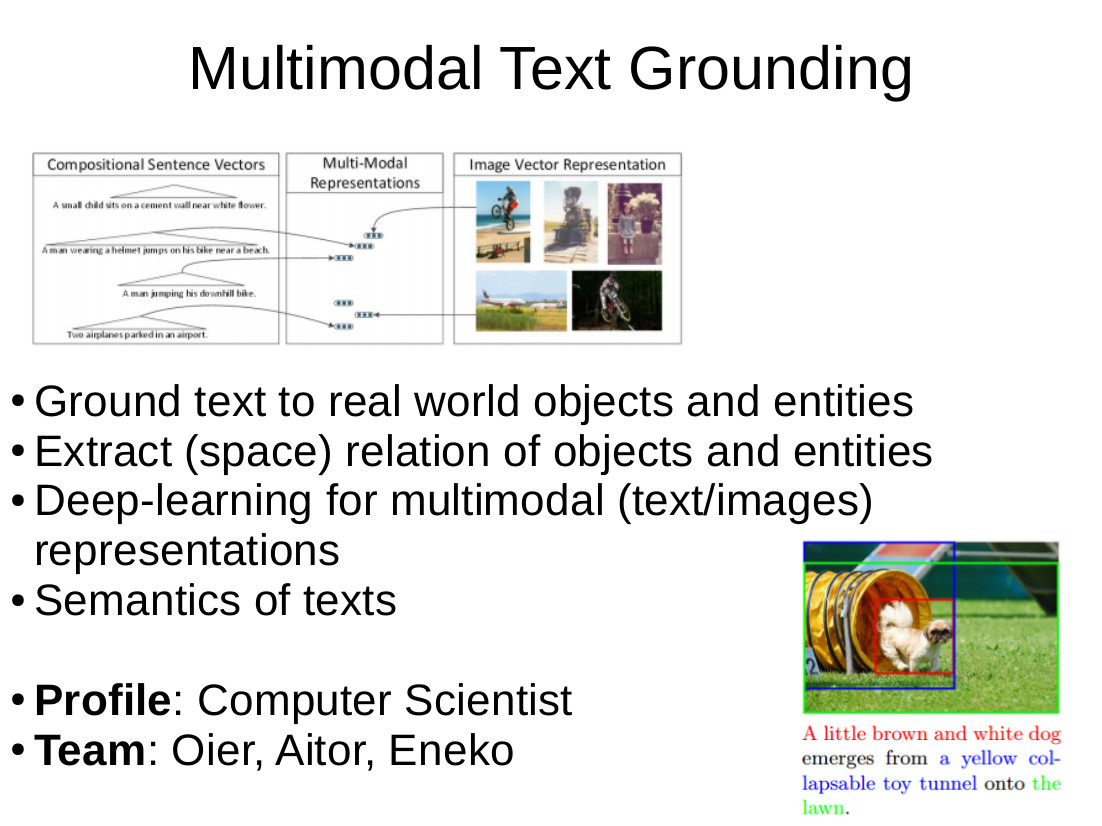

Multimodal Text Grounding

keywords:

Multimodal (text/images), Deep learning, Representational learning, Semantic Similarity, Word Sense Disambiguation

Objectives:

The objective of this thesis is to combine textual and visual information in a variety of NLP tasks. The student will use representational learning techniques to create models for words, senses and sentences using the implicit information present in text corpora and images. This visually grounded meaning representations will be further integrated into Deep Learning architectures to solve many NLP problems that require proper language understanding, such as measuring Semantic Textual Similarity or Word Sense Disambiguation.

Task:

- Learning semantically grounded representations for words, word senses and sentences.

- Applying deep learning techniques to combine visual and textual information.

- Applying deep learning techniques that use the multimodal representations of words and sentences to measure the semantic similarity of sentences including image captions.

- Applying deep learning techniques that use the multimodal representations of word senses to perform Word Sense Disambiguation.

References:

[1] E. Agirre, and P. Edmonds, eds. Word sense disambiguation: Algorithms and applications Vol. 33. Springer Science and Business Media,2007.

[2] K. Barnard, M. Johnson, and D. Forsyth. Word sense disambiguation with pictures. In Proceedings of the HLT-NAACL 2003 workshop on Learning word meaning from non-linguistic data, Volume 6. Association for Computational Linguistics, 2003.

[3] A. Krizhevsky, I. Sutskever, and G .E. Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems. 2012.

[4] K. Kurach, S. Gelly, M. Jastrzebski, P. Haeusser, O. Teytaud, D. Vincent, and O. Bousquet. Better Text Understanding Through Image-ToText Transfer. arXiv preprint arXiv:1705.08386. 2017 May 23.

[5] S. Gella, M. Lapata, and F. Keller. Unsupervised Visual Sense Disambiguation for Verbs using Multimodal Embeddings. In Proceedings of NAACL-HLT. 2016.

[6] J. Goikoetxea, E. Agirre, and A. Soroa. Random Walks and Neural Network Language Models on Knowledge Bases. In Proceedings of the Annual Meeting of the North American chapter of the Association of Computational Linguistics (NAACL HLT 2015), pages 1434-1439. 2015.

[7] Y. LeCun, Y. Bengio, and G. E. Hinton. Deep learning. Nature 521.7553 (2015): 436-444.

[8] W. May, S. Fidler, A. Fazly, S. Dickinson, and S. Stevenson. Unsupervised disambiguation of image captions. In Proceedings of the Sixth International Workshop on Semantic Evaluation, pages 85-89. 2012

[9] T. Mikolov, K .Chen, G .Corrado, and J .Dean. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781. 2013.

[10] Y. Peng, D. Z. Wang, I. Patwa, D. Gong, and C. V. Fang. Probabilistic Ensemble Fusion for Multimodal Word Sense Disambiguation. In IEEE International Symposium on Multimedia (ISM), pages 172-177. 2015.

[11] K. Saenko and T. Darrell. Filtering abstract senses from image search results. In Advances in Neural Information Processing Systems. 2009.

[12] D. Yuan, J. Richardson, R .Doherty, C .Evans, and E .Altendorf. Semisupervised word sense disambiguation with neural models. arXiv preprint arXiv:1603.07012. 2016).

[2] K. Barnard, M. Johnson, and D. Forsyth. Word sense disambiguation with pictures. In Proceedings of the HLT-NAACL 2003 workshop on Learning word meaning from non-linguistic data, Volume 6. Association for Computational Linguistics, 2003.

[3] A. Krizhevsky, I. Sutskever, and G .E. Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems. 2012.

[4] K. Kurach, S. Gelly, M. Jastrzebski, P. Haeusser, O. Teytaud, D. Vincent, and O. Bousquet. Better Text Understanding Through Image-ToText Transfer. arXiv preprint arXiv:1705.08386. 2017 May 23.

[5] S. Gella, M. Lapata, and F. Keller. Unsupervised Visual Sense Disambiguation for Verbs using Multimodal Embeddings. In Proceedings of NAACL-HLT. 2016.

[6] J. Goikoetxea, E. Agirre, and A. Soroa. Random Walks and Neural Network Language Models on Knowledge Bases. In Proceedings of the Annual Meeting of the North American chapter of the Association of Computational Linguistics (NAACL HLT 2015), pages 1434-1439. 2015.

[7] Y. LeCun, Y. Bengio, and G. E. Hinton. Deep learning. Nature 521.7553 (2015): 436-444.

[8] W. May, S. Fidler, A. Fazly, S. Dickinson, and S. Stevenson. Unsupervised disambiguation of image captions. In Proceedings of the Sixth International Workshop on Semantic Evaluation, pages 85-89. 2012

[9] T. Mikolov, K .Chen, G .Corrado, and J .Dean. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781. 2013.

[10] Y. Peng, D. Z. Wang, I. Patwa, D. Gong, and C. V. Fang. Probabilistic Ensemble Fusion for Multimodal Word Sense Disambiguation. In IEEE International Symposium on Multimedia (ISM), pages 172-177. 2015.

[11] K. Saenko and T. Darrell. Filtering abstract senses from image search results. In Advances in Neural Information Processing Systems. 2009.

[12] D. Yuan, J. Richardson, R .Doherty, C .Evans, and E .Altendorf. Semisupervised word sense disambiguation with neural models. arXiv preprint arXiv:1603.07012. 2016).

Team:

Eneko Agirre, Oier Lopez de Lacalle, Aitor Soroa

Profile:

Computer scientist

contact:

a.soroa[abildua|at]ehu.eus

Date:

2017